Architecture

Overview

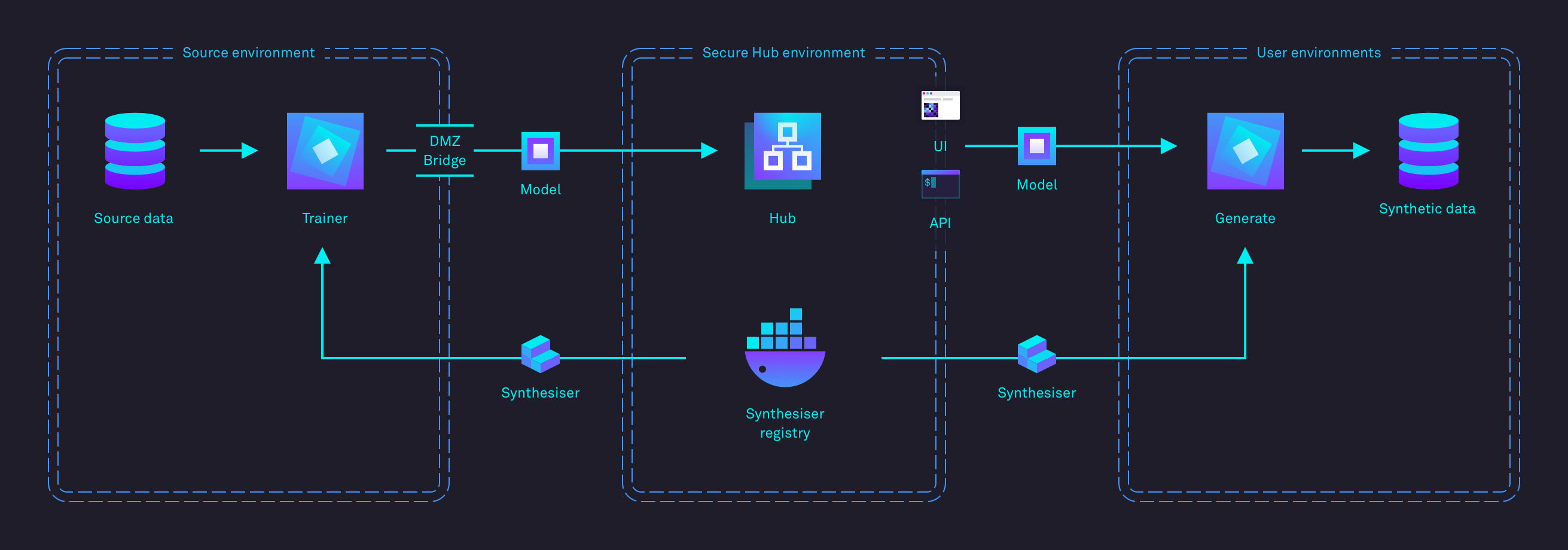

Hazy is enterprise software composed of multiple components that integrate with your existing network and security infrastructure.

These components are installed into our customers' on-premises, private cloud or hybrid network.

Instead of transmitting data, Hazy’s synthetic backbone deals entirely with Generator Models. This maintains data and network security because production data never leaves the trusted environment. The client-hub infrastructure then allows any amount of safe, synthetic data to be generated on-premises or in the cloud.

Components

A Hazy installation is composed of multiple components that can be deployed independently where necessary, which allows for flexible deployments that support multiple use cases.

The key elements of this system are described in the sections below.

Hub

The Hub is the centre of your Hazy installation. It can be accessed through a web UI as well as an HTTP API.

It provides the following features:

- Configuration workflow enables analysis of source data and subsequent refinement of table and column definitions to produce a Configuration prior to model training.

- Training workflow enables invocation of model training jobs and subsequent exploration of model metadata.

- Generation workflow enables invocation of synthetic data generation jobs.

- Model registry enables access to, and exploration of, historic Generator Models.

- Role-Based Access Control enables the Hub admin to control which activities users can perform, depending on their role within the organisation.
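
As an illustration of the Role-Based Access Control idea above, the following is a minimal sketch of a role-to-permission check. The role names, the `Action` values and the `is_allowed` helper are all hypothetical and are not part of the Hub's API; the actual roles and permissions are whatever the Hub admin configures.

```python
from enum import Enum


class Action(Enum):
    """Hypothetical activities, loosely mirroring the Hub workflows listed above."""
    CONFIGURE = "configure"
    TRAIN = "train"
    GENERATE = "generate"
    VIEW_MODELS = "view_models"
    MANAGE_USERS = "manage_users"


# Assumed mapping for illustration only; real roles are defined by the Hub admin.
ROLE_PERMISSIONS = {
    "admin": set(Action),  # every action
    "data_engineer": {Action.CONFIGURE, Action.TRAIN, Action.VIEW_MODELS},
    "analyst": {Action.GENERATE, Action.VIEW_MODELS},
}


def is_allowed(role: str, action: Action) -> bool:
    """Return True if the given role may perform the action; unknown roles get nothing."""
    return action in ROLE_PERMISSIONS.get(role, set())


if __name__ == "__main__":
    print(is_allowed("analyst", Action.GENERATE))  # analyst may generate data
    print(is_allowed("analyst", Action.TRAIN))     # but may not start training
```

Denying unknown roles by default keeps the check conservative: a user only gains an activity when a role explicitly grants it.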

Metrics

The model registry allows the user to explore model metrics. These are detailed graphical measurements of the similarity, utility and privacy of the synthetic data produced by individual Generator Models.

Synthesiser

The Synthesiser is the component that sits next to the production data to train a new Generator Model.

Once trained, this Generator Model is added to the Hub, making it available for synthetic data generation. The Synthesiser can subsequently be used to take the Generator Model and create a synthetic data set.
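
The train-then-generate lifecycle described above can be sketched with a deliberately simple toy. This is not how Hazy's Generator Models work internally, which the text does not describe. It assumes numeric columns and models each one independently with a mean and standard deviation, and the function names are hypothetical. The point it illustrates is that only the serialised model, not the source rows, is needed at generation time.

```python
import json
import random
import statistics


def train_generator_model(rows):
    """Toy 'training': summarise each numeric column and serialise the summary.

    Assumption: every row is a dict with the same numeric columns.
    """
    columns = list(rows[0].keys())
    model = {}
    for col in columns:
        values = [row[col] for row in rows]
        model[col] = {
            "mean": statistics.fmean(values),
            "stdev": statistics.pstdev(values),
        }
    # The serialised model contains summary statistics only, no source rows.
    return json.dumps(model)


def generate_synthetic_rows(serialised_model, n_rows, seed=0):
    """Toy 'generation': sample each column from a normal distribution."""
    model = json.loads(serialised_model)
    rng = random.Random(seed)  # seeded so repeated runs give the same output
    return [
        {col: rng.gauss(p["mean"], p["stdev"]) for col, p in model.items()}
        for _ in range(n_rows)
    ]


if __name__ == "__main__":
    source = [{"age": 30.0, "income": 100.0}, {"age": 40.0, "income": 200.0}]
    model = train_generator_model(source)        # runs next to production data
    synthetic = generate_synthetic_rows(model, 3)  # needs only the model
    print(len(synthetic), sorted(synthetic[0]))
```

Because generation depends only on the serialised model, the two functions could run in different network zones, which mirrors the separation between training and generation described in this section.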

Dispatcher

In distributed-architecture deployments, depending on the level of network segmentation employed, messages may be sent over a secure message queue between the Hub and the Dispatcher. This lets the Dispatcher create training and synthesis jobs in its local environment, and provides a degree of autonomy within the Hazy ecosystem without compromising security principles.
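
The Hub-to-Dispatcher pattern described above can be sketched as a producer and a consumer sharing a queue. This is a minimal sketch under stated assumptions: the standard-library `queue.Queue` stands in for the secure message queue, and the message fields (`job_type`, `configuration_id`, `model_id`, `n_rows`) and the `Dispatcher` class are hypothetical, not Hazy's actual message format or API.

```python
import json
import queue
from dataclasses import dataclass, field

ALLOWED_JOB_TYPES = {"train", "generate"}


def hub_request_job(outbox, job_type, payload):
    """Hub side: enqueue a job request containing metadata only, never data."""
    outbox.put(json.dumps({"job_type": job_type, **payload}))


@dataclass
class Dispatcher:
    """Hypothetical consumer that turns queued messages into local jobs."""
    inbox: queue.Queue
    started_jobs: list = field(default_factory=list)

    def poll_once(self):
        """Handle at most one message; return False if the queue was empty."""
        try:
            raw = self.inbox.get_nowait()
        except queue.Empty:
            return False
        message = json.loads(raw)
        if message.get("job_type") not in ALLOWED_JOB_TYPES:
            raise ValueError(f"unsupported job type: {message.get('job_type')!r}")
        # A real Dispatcher would start a job in its local environment here;
        # this sketch only records the request.
        self.started_jobs.append(message)
        return True


if __name__ == "__main__":
    q = queue.Queue()
    hub_request_job(q, "train", {"configuration_id": "cfg-1"})
    hub_request_job(q, "generate", {"model_id": "model-1", "n_rows": 1000})
    dispatcher = Dispatcher(inbox=q)
    while dispatcher.poll_once():
        pass
    print([job["job_type"] for job in dispatcher.started_jobs])
```

In this arrangement the Dispatcher pulls work rather than being called directly, so the segmented environment only needs access to the queue rather than an inbound connection from the Hub.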

Installation

Hazy components can be installed as a single-container installation, a standalone Synthesiser deployment or a distributed architecture, or can be set up quickly using an AWS Marketplace installation.

Data Transfer

Trained Generator Models must be accessible on the same network as the process that generates synthetic data.

The training process requires access to production data, so it generally runs within a DMZ environment separate from the company WAN.

The mechanism by which trained Generator Models are moved out of the DMZ containing the production data and into the more open network hosting the Hub is one of the core deployment decisions Hazy customers must make.

Nomenclature

- Synthesiser: A model training pipeline that ingests the source data and uses it to train a Generator Model. Packaged as a Docker/OCI container image.

- Generator Model: The serialised set of statistical properties of the source data, sufficient to re-create a synthetic version of the original.

- Configuration: The set of parameters used to train a model from input data.